Another Silent “Reset”: Equipping Human(itarian)s and AI to Serve the Forgotten Children in Fragile Contexts

Dr. Kathryn Taetzsch explores the transformative impact of artificial intelligence on the humanitarian workforce, urging a proactive and ethically grounded response to its rapid integration. While AI is enhancing efficiency in disaster response, climate forecasting, and displacement prediction, it cannot replace the human-centric values—empathy, adaptability, and community focus—that define humanitarian work. She highlights the ‘silent reset’ faced by the sector, where AI’s rise risks deepening inequalities and displacing routine jobs unless humanitarian organisations invest in upskilling, ethical governance and locally led innovation.

In March 2024, the Boston Consulting Group asked: “What will work be like in the next 50 years?” Their vision included AI-enabled nature-friendly economies and mental health prioritisation. Yet, it overlooked a critical sector: humanitarian and development workers—those who uphold human-centred values in increasingly tech-driven environments. AI adoption in the charity sector is surging. In the UK, 61% of charities used AI in 2024, up 34 percentage points in just one year. While debates rage over ethical boundaries and the risks of irreversible harm, the humanitarian sector—rooted in compassion and justice—offers a values-based framework for navigating this fundamental change.

So far, humanitarian expertise and skills have had to contend with extraordinary complexity, fragility, geopolitical power shifts, innovation, dramatic budget cuts and substantial workforce redundancies in this time of protracted poly-crisis. So, should humanitarians be ready to embrace yet another tech challenge, one that may further increase speed and complexity and upend the humanitarian workforce’s “identity”?

AI is said to be on course to outpace human capability soon. Keeping up with its risks and opportunities is a tall order for overstretched local and global humanitarian frontline staff amid funding cuts. (In the corporate sector, 70% of employees believe that genAI will change 30% or more of their current work.)

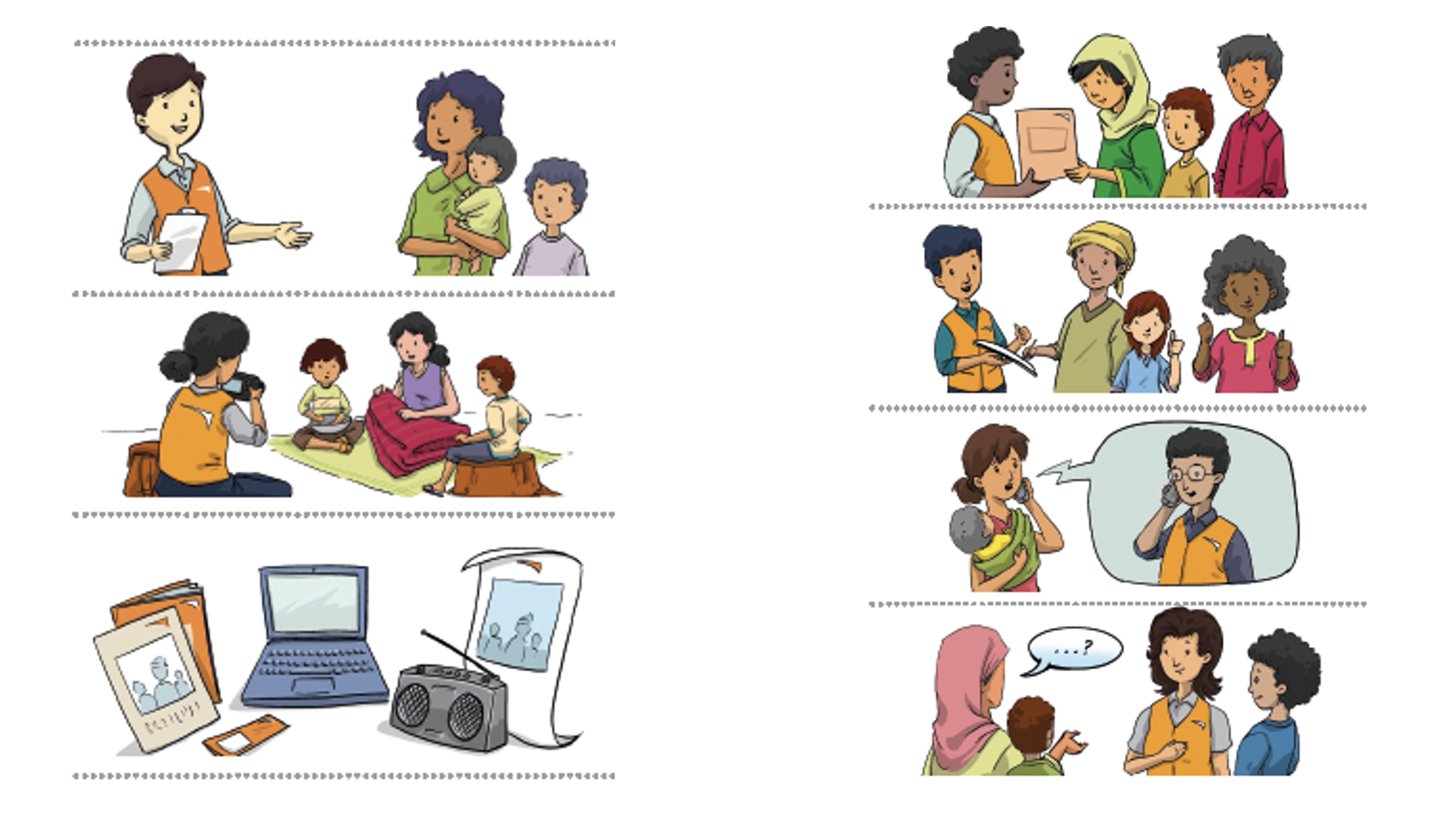

Humanitarians already use AI to improve disaster preparedness, forecast climate change impact, detect disease outbreaks, and predict displacement flows. AI-powered chatbots guide affected populations to assistance points, while multi-layered analysis helps tailor responses. These tools make humanitarian work more agile and efficient—but they cannot replace the soft skills that define humanitarianism: adaptability, empathy, problem-solving, and community focus.

Ethics and Accountability in AI Use

Despite these trends, the debate on ethical AI use feels increasingly “powerless”, even though it is framed around the principles of transparency, accountability, inclusivity and diversity, and respect for data privacy. The ethical dilemma persists: informed consent from disaster-affected populations, data privacy, and transparency about how AI-powered data analysis determines assistance all remain under-addressed. The AI Humanitarian Ethics Framework offers guidance, but operationalising it is urgent. World Vision is training staff through initiatives like Data Classes and the Humanitarian Data Academy to strengthen data governance.

Impact on humanitarian jobs and workforce efficiency

Humanitarians would distance themselves outright from any use of AI-facilitated technology that could cause harm, not least to the vulnerable children we try to serve. But when it comes to harmonising AI with human capacities, the omnipresence of AI-facilitated tech raises uncomfortable questions. Will it lead to redundancies among aid workers performing routine tasks such as finance, HR, data analytics and proposal writing? The World Economic Forum predicts 44% of the global workforce will be disrupted by AI between 2023 and 2028. Humanitarian workers are not immune. So who is influencing this narrative, and who is “left behind”? Is the algorithm considering any “duty of care”?

Yet AI enhances, rather than replaces, human expertise, increasing efficiency and effectiveness in regular humanitarian and development programming to reach the most vulnerable children. For example, “Integrate”, an AI-powered tool co-developed by World Vision, provides real-time support in multiple languages for field experts in ‘Education in Emergencies’ and ‘Child Protection in Humanitarian Action’, with Standard Operating Procedures (SOPs), concept notes and step-by-step guides for context-sensitive programming, all intended as complementary tools. Ultimately, the aim is to support humanitarian workers in acquiring relevant AI competencies and skillsets.

The skills gap and localisation in a rapidly changing humanitarian landscape

Do humanitarian workers, whether a field monitor or a response director, understand how algorithms and AI programmes align with principles like fairness, accuracy, and auditability? Are humanitarians ready for the future? A 2025 survey by DataFriendlySpace found that 64% of humanitarian staff had little to no AI training, though 73% identified it as a top priority.

In the shadow of shrinking aid budgets and increasingly protracted global crises, the humanitarian sector is undergoing a seismic shift, one led by algorithms. As artificial intelligence becomes cheaper and more accessible, particularly in the Global South, we are witnessing what the World Economic Forum calls an “unbundling effect”: the cost of expertise plummeting by up to 90%, and with it, the traditional structures of humanitarian work. Young people living in fragile contexts, refugee camps, or moving among displaced populations are not waiting for permission to innovate. They are building and deploying AI-powered tools to connect, deliver assistance, train communities and rebuild futures. While India now holds the highest concentration of AI skills globally, according to a UNDP report, Ukraine’s tech-savvy youth are leading start-ups amidst conflict. These are examples of locally led communities that are not passive recipients of aid but active architects of a new humanitarian paradigm.

The conversation is broader than automation replacing jobs; it is about redefining what humanitarian assistance looks like. Trends indicate that the disparity between the AI-literate “haves” and the “have-nots” is growing and is spread across geographies. Without equitable access to AI tools and training, we risk reinforcing the very inequalities humanitarian work seeks to dismantle. At the same time, in fragile contexts, limited internet access is not the only barrier; the misuse of AI tools is also a concern. When weaponised, AI can endanger the lives of humanitarian actors and of the most vulnerable children and families in conflict zones. Principled governance must go hand in hand with technological literacy. Humanitarian staff must now navigate a complex terrain: choosing tools that are contextually relevant, ethically sound, workable as low-tech solutions, locally led (UNDP) and globally facilitated.

Will AI-powered tools replace human(itarian) action?

The short answer: no. AI will become routine in recruitment, programme design and analysis. It can personalise learning journeys by identifying skill gaps, suggesting blended learning components, etc. At the same time, AI-facilitated recruitment of talent (JDs produced with relevant and contextual prompts, automated application and CV review, pre-screening via virtual bot-facilitated candidate interviews) can streamline hiring, especially during rapid recruitment and onboarding in a large-scale humanitarian disaster response. However, human oversight remains imperative, especially in data processing and in decision-making, to ensure quality outputs underpinned by ethical principles and practice.

What’s Needed Now?

Despite years of debate, progress on global AI governance has been slow. The UN’s AI Governance Compact aimed to include civil society voices, but its operationalisation remains limited.

Aid organisations must act swiftly to:

- Upskill staff through informal cross-learning and cross-functional platforms and integrate AI into emergency preparedness simulations.

- Facilitate reverse mentoring through local thought leadership. For example, AI experts from countries such as Kenya and India, which have cutting-edge knowledge and the highest AI skill concentration, can support the rapid adaptation of local and global organisations in crisis contexts, with relevant ethical considerations and safeguards.

- Educate senior management on AI risks and opportunities, enabling effective AI governance frameworks (https://ethicai.net/the-safe-ai-project-a-responsible-ai-framework-for-humanitarian-action) and playbooks that balance efficiency gains, which allow resources to be redirected to saving lives, with local resourcefulness that helps harness use cases grounded in field realities.

The humanitarian sector must embrace this silent reset, not as a threat, but as an opportunity to reimagine aid: one that is digitally inclusive, ethically grounded, and led by those closest to the crisis. Otherwise, it risks undermining the very values humanitarian work stands for.

About the Author:

With over 25 years of experience in humanitarian response and academic research, Dr Kathryn Taetzsch brings deep expertise in fragile and crisis-affected contexts across Africa, Asia, the Middle East, and the Americas. Her work spans strategic leadership and operational delivery in conflict zones and natural disasters, with a strong focus on advancing inclusive, locally led solutions. Kathryn has shaped global discourse through contributions to key humanitarian forums and publications, championing shock-responsive social protection, the Humanitarian-Development-Peace Nexus and financial inclusion for vulnerable families.